Reproducibility

Overview

Reproducibility ensures that machine learning models produce consistent and reliable results. It allows others to replicate experiments, verify findings, and collaborate effectively.

- Reduces bias and improves research integrity

- Builds confidence in model accuracy

- Supports collaboration and knowledge sharing

Using MLflow

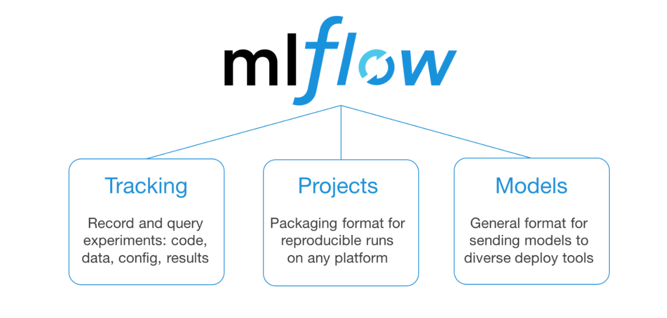

MLflow is an open-source tool for tracking and managing ML experiments. It helps log dependencies, code versions, and experiment settings, which makes it easy to reproduce ML workflows.

- Tracks code, models, and metrics

- Supports collaboration with shared experiment logs

- Integrates with tools like scikit-learn

Example: MLflow with Scikit-Learn

MLflow makes tracking model training simple. Below is an example of using MLflow to log a RandomForest model.

import mlflow

import mlflow.sklearn

from sklearn.ensemble import RandomForestClassifier

from sklearn.datasets import load_iris

from sklearn.model_selection import train_test_split

# Load data

X, y = load_iris(return_X_y=True)

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Start MLflow run

with mlflow.start_run():

model = RandomForestClassifier(n_estimators=100)

model.fit(X_train, y_train)

# Log model and parameters

mlflow.sklearn.log_model(model, "model")

mlflow.log_param("n_estimators", 100)

# Log metrics

accuracy = model.score(X_test, y_test)

mlflow.log_metric("accuracy", accuracy)

print("Model logged with MLflow!")

MLflow logs the model, parameters, and accuracy, making it easy to track and compare runs.

Tracking Code with MLflow

MLflow helps track code versions and changes, and ensures experiments can be exactly reproduced.

- Logs code used in experiments

- Helps debug and troubleshoot issues

- Identify version of code used to produce results

- Enables comparison of different model versions

Model Registry

A model registry stores and manages different versions of ML models along with metadata.

- Logs model versions and performance metrics

- Allows easy rollback to previous models

- Used for comparing models and repdocuing ML pipelines

- Ensures consistency in production deployments

Experiment Reproducibility

MLflow logs key elements of an experiment:

- Input data

- Code and dependencies

- Model settings and results

This allows others to validate findings and replicate results reliably.

Importance of Documentation

Clear documentation is essential for reproducibility.

- Include input data, code, and settings

- Keep records updated and accessible

- Ensure others can understand and build upon your work

By following these principles, ML experiments become reliable, transparent, and easy to reproduce.