Measuring AI Success

Overview

It’s important to know if an AI system is actually successful. Success means both reaching business goals and showing measurable performance improvements.

- Defines if goals are achieved

- Monitors performance before and after deployment

- Ensures return on investment is positive

By tracking results carefully, we confirm that AI creates real value and not just theoretical results.

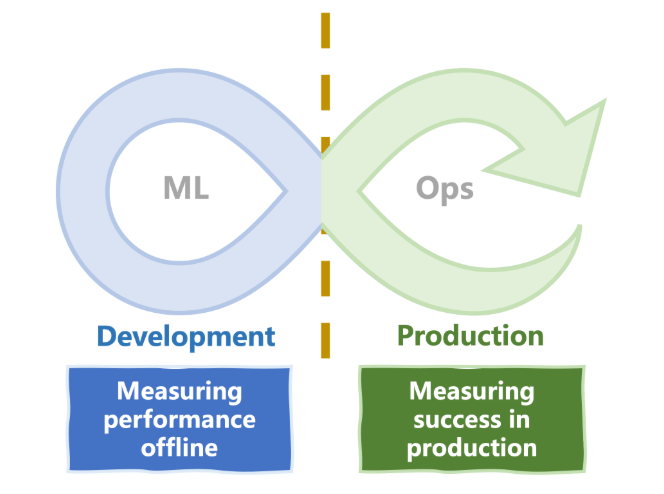

When to Measure Success

Measuring AI success is not a one-time activity.

- During development to check early performance

- After deployment in production

- Continuously through monitoring processes

This ongoing measurement makes sure AI stays effective over time.

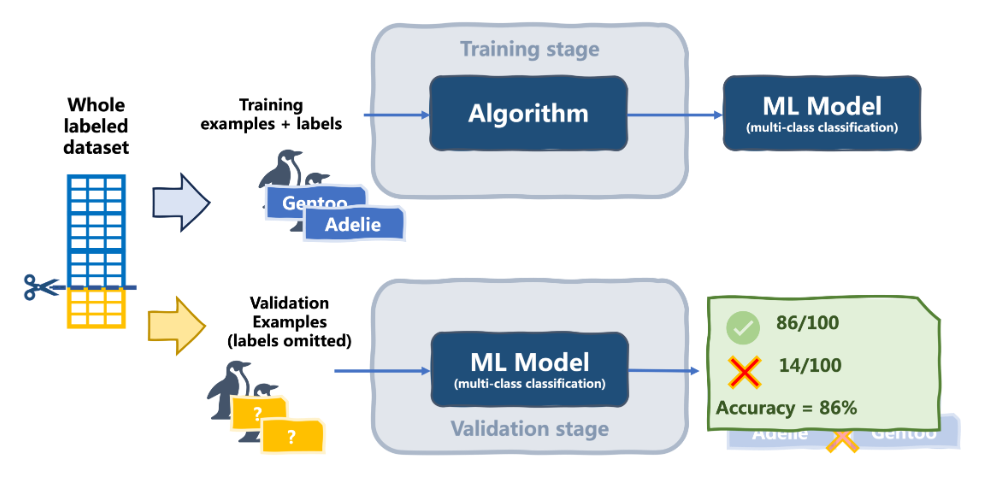

Measuring Performance Offline

Before deployment, models are tested with metrics to check how accurate and reliable they are.

-

Training Stage

- Example: Classify penguin species using labeled data

- We use part of the dataset to train the model

- earn patterns that separate one species from another

-

Validation Stage

- The remaining data is set aside for validation.

- The model predicts species for these unseen penguins

- We compare its predictions with the true labels.

- Some predictions will be correct, others wrong.

In short, accuracy measures how often the model gets the answer right on new data. This gives a clear picture of its performance before moving to production.

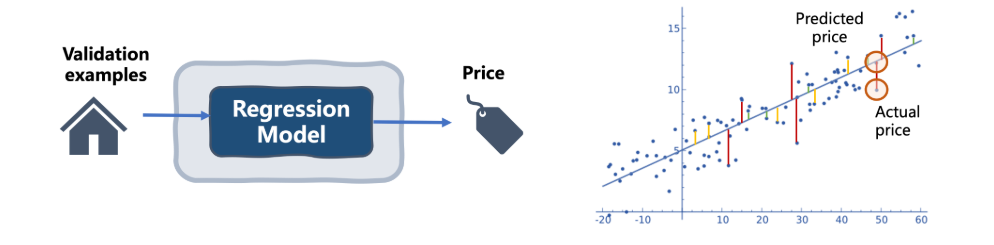

Beyond Accuracy

Depending on the problem and type of solution, there are other important metrics to monitor.

- Regression models focus on prediction error

- Recommendation systems measure ranking and relevance

- Search engines may look at user diversity and satisfaction

If performance is poor, the solution may need better data, fine-tuning, or adjustments. Metrics guide improvements to reach acceptable performance.

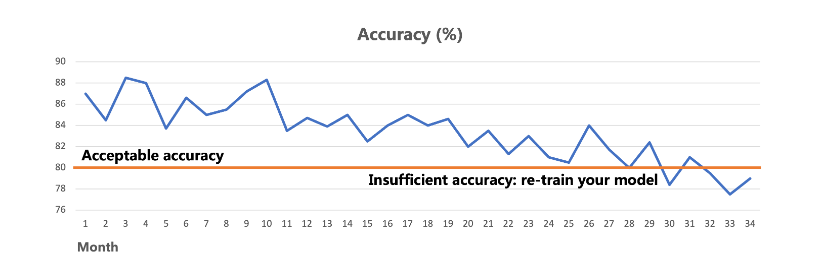

Measuring Success in Production

Once deployed, models must still be monitored.

- Watch performance metrics over time

- Align results with business KPIs

- Detect model degradation when data changes

Model degradation occurs when real-world data no longer matches the data used for training. For example, if the type of incoming data changes, the model may need to be retrained.

Key Performance Indicators help connect AI performance to actual business success, making the system more valuable in the long term.

Risks in AI Systems

AI projects carry risks that must be managed.

- Data bias causing unfair results

- Lack of transparency in decisions

- Ethical issues with data usage

- System reliability and robustness

- Vulnerability to security threats

A good way to reduce risk is starting with a Proof-of-Concept. This pilot version tests feasibility and potential before full deployment.