Experiment Tracking

Overview

A machine learning experiment involves training and evaluating multiple models to find the best one. Tracking those experiments keeps the work organized and consistent, and makes it possible to:

- Compare Results: See which model performs best.

- Reproduce Experiments: Repeat tests with the same settings.

- Collaborate: Share progress with teammates.

- Report Findings: Provide clear updates to stakeholders.

In machine learning experiments, we test different models, such as linear regression or neural networks. Between runs we can also adjust hyperparameters, swap datasets, and run different scripts under specific environment setups.
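One way to make these moving parts explicit is to capture each run as a single record. The sketch below is illustrative only: the `ExperimentRecord` class, its field names, and the example values are assumptions made for this example, not part of any particular tracking tool.

```python
import hashlib
import json
import platform
from dataclasses import asdict, dataclass, field


@dataclass
class ExperimentRecord:
    """Hypothetical record of everything that can vary between runs."""

    model_type: str        # e.g. "linear_regression" or "neural_network"
    hyperparameters: dict  # settings such as the learning rate
    dataset: str           # name or version of the dataset used
    script: str            # script that ran the experiment
    environment: dict = field(
        default_factory=lambda: {"python": platform.python_version()}
    )

    def fingerprint(self) -> str:
        """Short stable hash: identical configurations give identical IDs."""
        payload = json.dumps(asdict(self), sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()[:12]


# Example values are placeholders, not results from a real project.
run_a = ExperimentRecord("linear_regression", {"learning_rate": 0.01}, "dataset_v1", "train.py")
run_b = ExperimentRecord("neural_network", {"learning_rate": 0.01}, "dataset_v1", "train.py")
print(run_a.fingerprint() != run_b.fingerprint())  # different model, different ID
```

Hashing the whole record gives a cheap way to spot whether two runs really used the same configuration.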

Without experiment tracking, you may face challenges like:

- Difficulty in reproducing experimental results

- Increased time spent in debugging and troubleshooting

- Lack of transparency in the model development process

Tracking Experiments

The right tracking method depends on the complexity of the project.

- Manual Tracking

  - Use spreadsheets to log model details.

  - Works for small projects but becomes tedious with more experiments.

  - Requires a lot of manual work.

- Custom Experiment Platform

  - A proprietary, in-house platform built as a custom solution.

  - Build a system to track experiments automatically.

  - Flexible but requires time and effort to develop.

- Experiment Tracking Tools

  - Use existing tools to log results efficiently.

  - Takes time to learn, but is usually the best option for large projects.

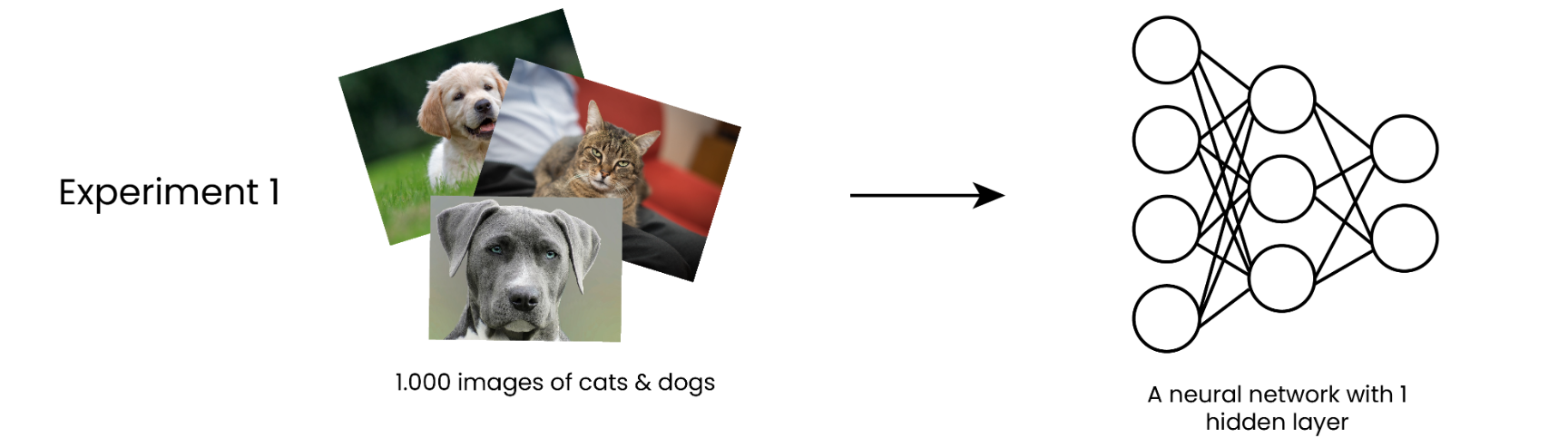
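As a rough illustration of moving from manual to automated logging, the sketch below appends one row per run to a CSV file: the same data a spreadsheet would hold, but written by code. The function names `log_run` and `load_runs`, the column names, and the file layout are assumptions made for this example, not any tool's API.

```python
import csv
import json
from datetime import datetime, timezone
from pathlib import Path

# Hypothetical column layout for the run log.
FIELDS = ["timestamp", "run_name", "model", "params", "metric_name", "metric_value"]


def log_run(log_path, run_name, model, params, metric_name, metric_value):
    """Append one experiment row to a CSV file, writing the header on first use."""
    path = Path(log_path)
    is_new = not path.exists()
    with path.open("a", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDS)
        if is_new:
            writer.writeheader()
        writer.writerow({
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "run_name": run_name,
            "model": model,
            "params": json.dumps(params, sort_keys=True),  # keep settings machine-readable
            "metric_name": metric_name,
            "metric_value": metric_value,
        })


def load_runs(log_path):
    """Read all logged rows back as a list of dictionaries (values are strings)."""
    with Path(log_path).open(newline="") as f:
        return list(csv.DictReader(f))
```

Calling `log_run(...)` at the end of every training script removes the copy-and-paste step of a manual spreadsheet. A dedicated tracking tool typically goes further by adding storage, comparison views, and sharing.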

Example: Training a Model

Suppose we're classifying images as dogs or cats.

-

First Experiment

-

Train a neural network with one hidden layer.

-

Use 1,000 images of dogs and cats.

-

-

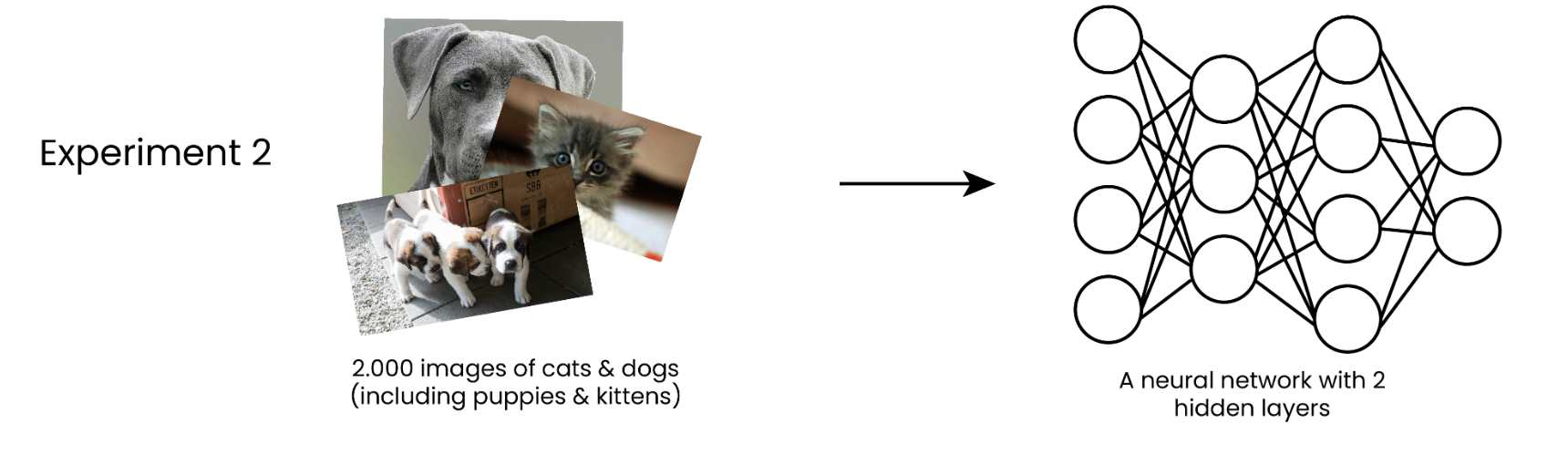

Second Experiment

-

Add puppy and kitten images, increasing data to 2,000 images.

-

Use a deeper model with two hidden layers.

-
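The two experiments above can be written down as configurations and compared. This is a minimal sketch: the dictionary keys and the `diff_configs` helper are hypothetical names chosen for illustration, while the layer counts and image counts come from the example itself.

```python
first_experiment = {
    "task": "dog_vs_cat_classification",
    "model": "neural_network",
    "hidden_layers": 1,
    "num_images": 1000,
    "image_types": ["dogs", "cats"],
}

second_experiment = {
    "task": "dog_vs_cat_classification",
    "model": "neural_network",
    "hidden_layers": 2,
    "num_images": 2000,
    "image_types": ["dogs", "cats", "puppies", "kittens"],
}


def diff_configs(old, new):
    """Return {key: (old_value, new_value)} for every setting that changed."""
    keys = sorted(set(old) | set(new))
    return {k: (old.get(k), new.get(k)) for k in keys if old.get(k) != new.get(k)}


changes = diff_configs(first_experiment, second_experiment)
for key, (before, after) in changes.items():
    print(f"{key}: {before} -> {after}")
# hidden_layers: 1 -> 2
# image_types: ['dogs', 'cats'] -> ['dogs', 'cats', 'puppies', 'kittens']
# num_images: 1000 -> 2000
```

The diff shows that both the data and the architecture changed between runs. Without a record like this, it is hard to tell afterwards which change was responsible for any difference in results.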

Experiment Process

A machine learning experiment follows these steps:

- Define Hypothesis: What do we want to test?

- Gather Data: Collect and prepare datasets.

- Set Hyperparameters: Choose model settings like layers or learning rate.

- Enable Tracking: Log model versions, datasets, and configurations.

- Train and Evaluate: Run models and compare results.

- Register Best Model: Save details of the best-performing model.

- Visualize and Report: Share findings with the team.
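The steps above can be sketched end to end with a deliberately tiny example. Everything here is a toy stand-in: the hand-made dataset, the threshold "model", and names such as `evaluate`, `runs`, and `model_registry` are assumptions for illustration, and a real project would replace them with actual training code and a real tracking backend.

```python
# 1. Define hypothesis.
hypothesis = "A decision threshold near 0.5 separates the two classes best."

# 2. Gather data: (feature, label) pairs in a tiny hand-made dataset.
data = [(0.1, 0), (0.2, 0), (0.4, 0), (0.45, 0),
        (0.55, 1), (0.6, 1), (0.8, 1), (0.9, 1)]

# 3. Set hyperparameters: one candidate configuration per run.
candidate_configs = [{"threshold": 0.3}, {"threshold": 0.5}, {"threshold": 0.7}]


def evaluate(config, dataset):
    """Stand-in for train + evaluate: accuracy of a fixed threshold rule."""
    correct = sum(int(x > config["threshold"]) == y for x, y in dataset)
    return correct / len(dataset)


# 4. Enable tracking: every run is logged with its configuration and data size.
runs = []

# 5. Train and evaluate each configuration.
for run_id, config in enumerate(candidate_configs, start=1):
    runs.append({
        "run_id": run_id,
        "config": config,
        "dataset_size": len(data),
        "accuracy": evaluate(config, data),
    })

# 6. Register the best model (here, just the best run's details).
best_run = max(runs, key=lambda r: r["accuracy"])
model_registry = {"best_model": best_run}

# 7. Visualize and report: a plain-text summary, best run first.
print(f"Hypothesis: {hypothesis}")
for run in sorted(runs, key=lambda r: r["accuracy"], reverse=True):
    print(f"run {run['run_id']}: {run['config']} accuracy={run['accuracy']:.2f}")
```

Because each run is stored with its configuration, the comparison and the final report are read straight from the log instead of being rebuilt from memory.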