AI Prompt Decorator Plugin

Overview

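The AI Prompt Decorator plugin in Kong adds a fixed set of chat messages to the start or end of each request's message history before the request is forwarded to the AI model. This lets you steer the model's responses, for example by setting a language or tone, without changing the client application.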

- Customize API responses to suit AI model requirements.

- Dynamically modify request or response content for better performance.

- Simplify integration with AI-powered services.

Before proceeding, ensure the AI Proxy plugin is enabled in your Kong instance.

Lab Environment

This lab requires OpenAI credits. You must first create an OpenAI account and purchase credits.

This lab tests a Kong API Gateway deployment using a FastAPI endpoint. For simplicity, both the containerized Kong API Gateway and the FastAPI endpoint run locally on a Windows 10 machine. A Docker Compose file deploys Kong along with other applications such as Prometheus, Zipkin, and the ELK Stack.

Make sure that you have installed Docker Desktop.

Installing Docker directly in WSL2 without Docker Desktop may cause issues with communication between the containerized Kong API Gateway and the FastAPI application running on the local host.

Pre-requisites

- Postman

- Set up the Kong API Gateway

- Kong Manager OSS Access

- Enable AI Proxy plugin

- Configure the Service and Route
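One way to confirm the AI Proxy prerequisite from a terminal is to list the configured plugins through the Admin API and look for ai-proxy. This is a minimal sketch, assuming the Admin API is reachable at http://localhost:8001 (the address used elsewhere in this lab); has_plugin is a hypothetical helper name introduced here for illustration.

```shell
# Assumption: Kong's Admin API listens on localhost:8001, as in the rest of this lab.
ADMIN_URL="${ADMIN_URL:-http://localhost:8001}"

# has_plugin NAME: hypothetical helper. Reads Admin API JSON on stdin and
# succeeds if a plugin entry with exactly that name is present.
# This is a simple text match, not a full JSON parser.
has_plugin() {
  grep -q "\"name\" *: *\"$1\""
}

# GET /plugins lists plugins of every scope (global, service, route).
if curl -s --max-time 5 "$ADMIN_URL/plugins" | has_plugin "ai-proxy"; then
  echo "ai-proxy is configured"
else
  echo "ai-proxy not found (or the Admin API is unreachable)"
fi
```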

Enable the Plugin

You can enable the plugin either in the Kong Manager console or by running the following curl command in your terminal:

curl -i -X POST http://localhost:8001/routes/openai-route/plugins \
  --header "accept: application/json" \
  --header "Content-Type: application/json" \
  --data '
  {
    "name": "ai-prompt-decorator",
    "config": {
      "prompts": {
        "append": [
          {
            "role": "system",
            "content": "You will respond in Filipino or Tagalog"
          }
        ]
      }
    }
  }'

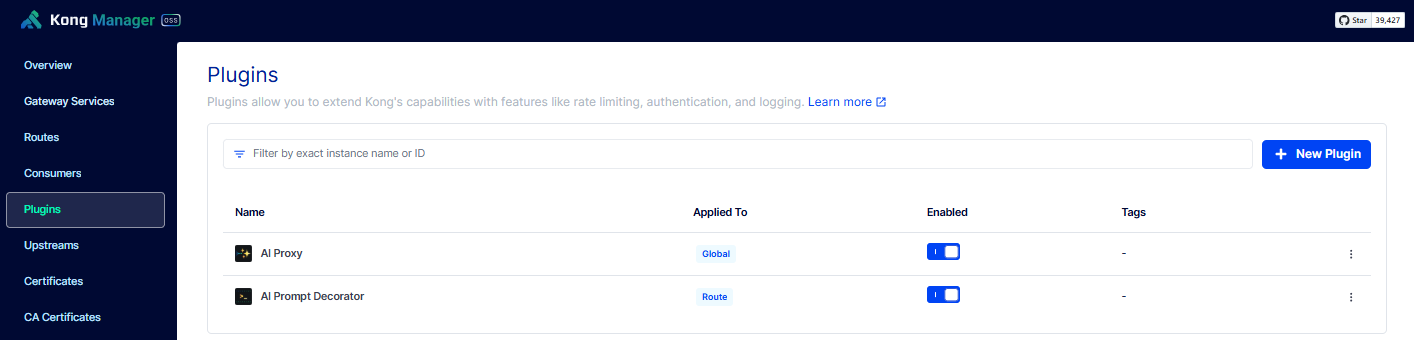

Login to the Kong Manager and confirm that the AI Prompt Decorator plugin is enabled:
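The same check can also be made from a terminal by asking the Admin API for the plugins attached to the route. The sketch below assumes the Admin API address and the openai-route name from the curl command above; show_prompts is a hypothetical helper introduced for illustration, and it uses a plain text match rather than a real JSON parser.

```shell
# Assumptions: Admin API on localhost:8001 and a route named openai-route,
# both taken from the enable command in this lab.
ADMIN_URL="${ADMIN_URL:-http://localhost:8001}"
ROUTE="${ROUTE:-openai-route}"

# show_prompts: hypothetical helper. Reads Admin API JSON on stdin and prints
# each "content" field, i.e. the prompt text stored in the plugin config.
show_prompts() {
  grep -o '"content" *: *"[^"]*"'
}

# Fetch the plugins attached to the route; an empty response means the
# Admin API could not be reached.
response=$(curl -s --max-time 5 "$ADMIN_URL/routes/$ROUTE/plugins" || true)

if echo "$response" | grep -q '"name" *: *"ai-prompt-decorator"'; then
  echo "ai-prompt-decorator is enabled on route $ROUTE"
  echo "$response" | show_prompts
else
  echo "ai-prompt-decorator not found on route $ROUTE (or the Admin API is unreachable)"
fi
```

If the plugin is attached, the printed content line should match the system prompt that was configured when the plugin was enabled.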

Testing the Plugin

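Now open Postman and send a chat request to the route. Because the plugin appends the system prompt configured above, the reply should come back in Filipino (Tagalog) even if the question is asked in English.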
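For readers who prefer a terminal to Postman, a request along the following lines should exercise the plugin. This is only a sketch under stated assumptions: the proxy is assumed to listen on Kong's default port 8000, the path /openai-chat is a hypothetical placeholder for whatever path your openai-route actually uses, and the body assumes the AI Proxy plugin is configured for chat-style requests.

```shell
# Assumption: Kong's proxy listens on its default port 8000.
PROXY_URL="${PROXY_URL:-http://localhost:8000}"
# Hypothetical path: replace with the path configured on your openai-route.
ROUTE_PATH="${ROUTE_PATH:-/openai-chat}"

# The question is asked in English and contains no language instruction;
# the language instruction comes from the prompt appended by the plugin.
PAYLOAD='{"messages":[{"role":"user","content":"What is the capital of Japan?"}]}'

curl -s --max-time 30 -X POST "$PROXY_URL$ROUTE_PATH" \
  --header "Content-Type: application/json" \
  --data "$PAYLOAD" \
  || echo "request failed: check that Kong is running and the path is correct"
```

If the decorator is active, the assistant's message in the JSON response should be written in Filipino even though the question was asked in English.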