Hyperparameter Tuning

Hyperparameters

Hyperparameters are values set before training that contorl the learning process.

- Hyperparameters must be chosen carefully

- They significantly influence the model's accuracy.

- Cannot be learned from data during training

Model parameters, like weights and biases, are learned during training. Hyperparameters, on the other hand, must be set before training begins. Examples include:

- Model Architecture in a Neural Networ;

- Number of branches in a decision tree

- Learning rate

Hyperparameter Tuning

Hyperparameter tuning is used to improve the performance of machine learning models.

- Adjusting hyperparameters to improve model performance

- Focus on factors like learning rate, number of layers, or tree depth

- Common starting point for model optimization

Tuning helps find the best combination of hyperparameters for the model.

Hyperparameter Tuning Methods

Different methods offer distinct approaches to hyperparameter tuning.

-

Grid Search

- Tests all combinations from a predefined grid

- Exhaustive but time-consuming

-

Random Search

- Randomly tests hyperparameter combinations

- Faster than grid search but can miss optimal solutions

-

Bayesian Search

- Uses Bayesian optimization to refine hyperparameter selection

- Efficient as it learns from past trials to guide future searches

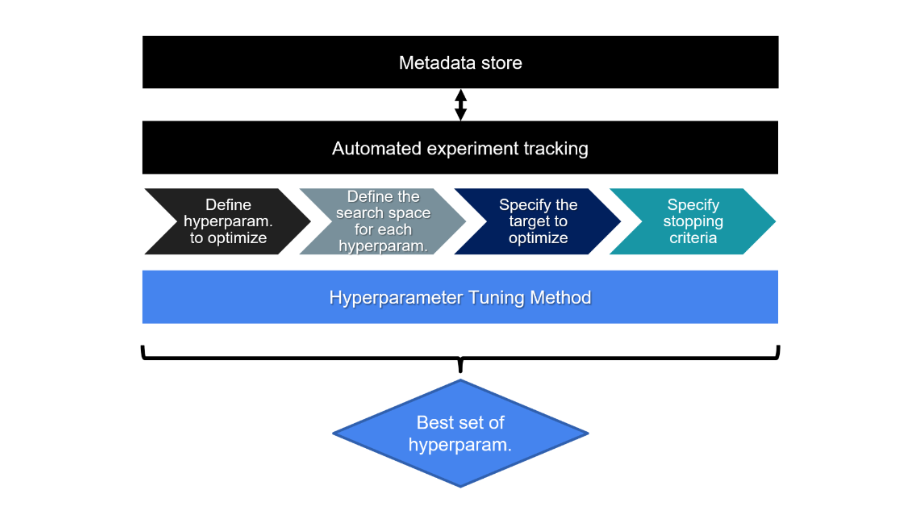

Automated Hyperparameter Tuning

Manually finding the best hyperparameter combination is time-consuming. Automating this process helps find the best set of hyperparameters efficiently.

- Saves time and effort

- Leads to better-performing models

- Ensures optimal hyperparameter selection

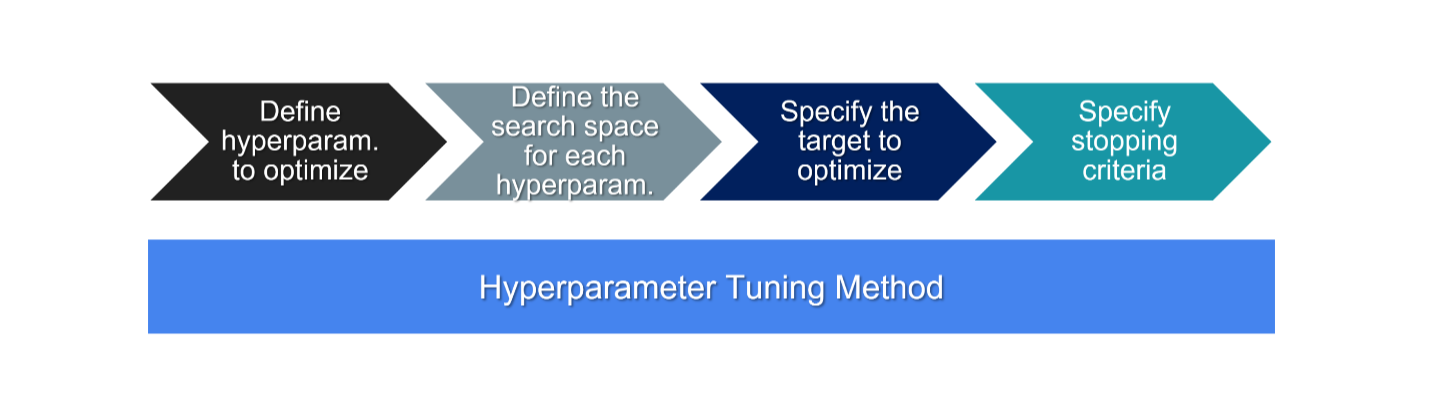

Steps for Automated Tuning

These steps guide the automated tuning process to find the best model parameters.

- Define hyperparameters to tune

- Set search space (discrete values or ranges)

- Choose a performance metric (e.g., recall, precision)

- Set stopping criteria (e.g., maximum trials)

Environment Symmetry

Consistent settings across environments lead to reliable model performance.

- Ensures model behaves consistently across environments

- Prevents unexpected behavior when deploying models

Tracking Experiments

Automated tracking logs all tuning details to help manage experiments.

- Automate tracking of hyperparameter experiments

- Record all hyperparameter combinations explored

- Store metadata for later reference

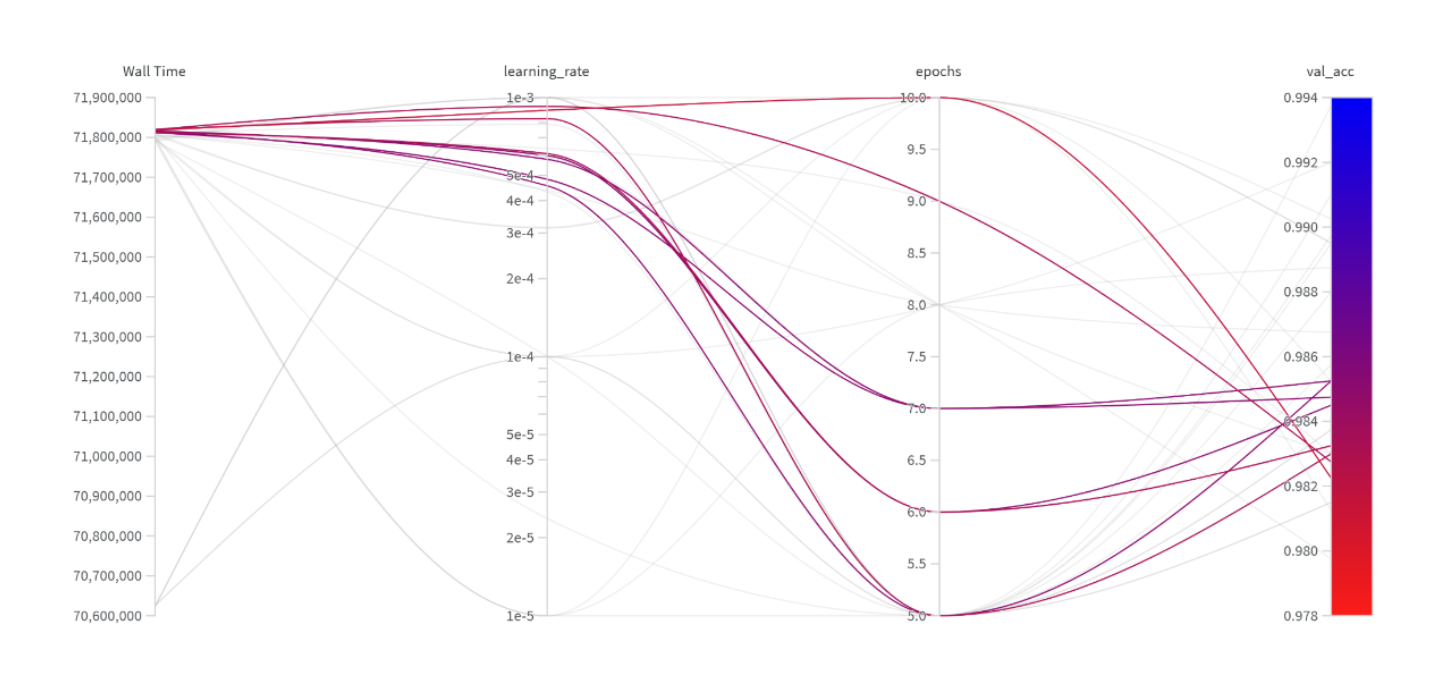

Hyperparameter Visualization

Visualization helps in interpreting the effects of hyperparameter changes.

- Understand the impacts of different hyperparameters

- Helps analyze performance variations with different values