Data Integration

Overview

Data integration combines information from different databases, formats, and technologies to create a unified view. As organizations grow, data often becomes scattered across various systems, making integration essential for a cohesive data strategy.

- Involves merging diverse data formats, databases, and technologies

- Provides a unified and comprehensive view of information across systems

Real-World Applications

There are several common scenarios where data integration is utilized:

- 360-degree customer view to unify customer information stored in different databases

- Merging different company databases to simplify operations after a merger

- Connecting legacy and modern systems to allow querying across all data sources at once

Designing the Unified Data Model

A unified data model is necessary to achieve effective integration. This model serves as the foundation for various use cases, such as building dashboards or developing data-driven products.

When designing the model:

- Define the purpose, such as creating visual dashboards

- Examples: Daily sales charts or products like recommendation engines

- Final data model is fast and scalable enough to meet the use case requirements

Data Sources and Formats

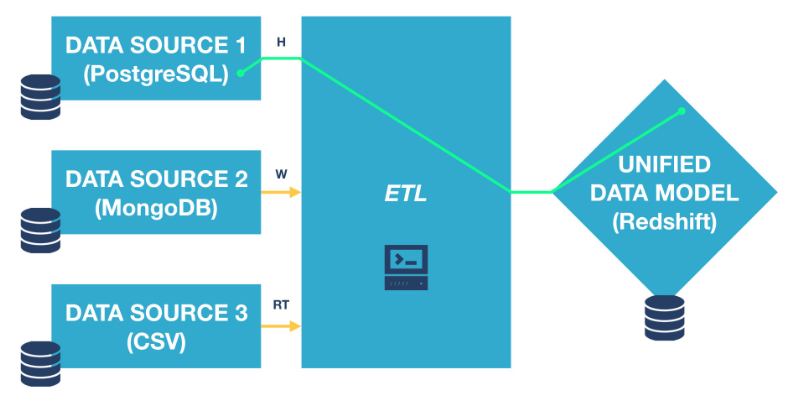

The next step is to identify the data sources that hold the required information. These sources could be in various formats, such as:

- Databases like PostgreSQL or MongoDB

- Files like CSVs

Understanding the format of each source and determining how we may combine the sources helps in planning the data integration process more effectively.

Update Cadence for Data

Decide how frequently you need to update the integrated data. The cadence may vary based on the type of data being handled:

- For sales data, daily updates might be sufficient

- For applications like air traffic monitoring, continuous and real-time updates are necessary

It's common to have different update frequencies for different sources, so integration should account for this variation.

- Weekly?

- Daily?

- Hourly?

- Real-time?

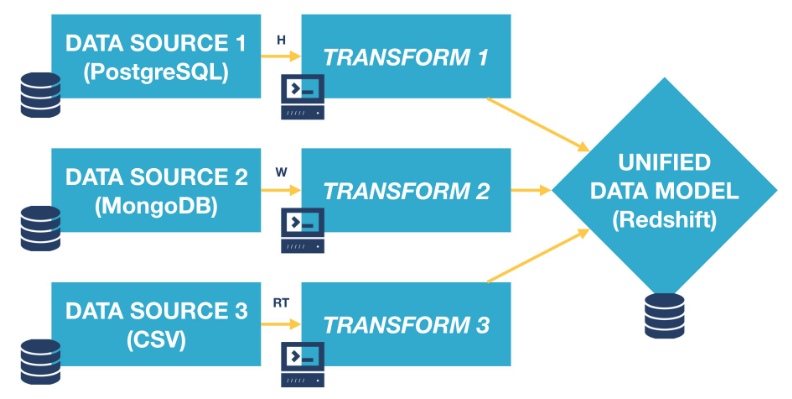

Handling Data Transformations

After determining the frequency of updating the data, the next step is to know how we can transform the data from different source into a format compatible with the unified data model. Transformations involve extracting data from one format and converting it into another to fit the target model.

This can be done manually by coding transformations for each source, but it often requires ongoing maintenance.

Using Data Integration Tools

To streamline the data transformation process, data integration tools are commonly used. These tools provide automated extract, transform, and load (ETL) processes. Examples of such tools include:

- Apache Airflow

- Scriptella

Choosing the right tool for data integration is critical for long-term success. The tool should be:

- Flexible enough to connect with all your data sources

- Reliable, ensuring it can be maintained for years to come

- Scalable, allowing for future growth in data volume and new sources

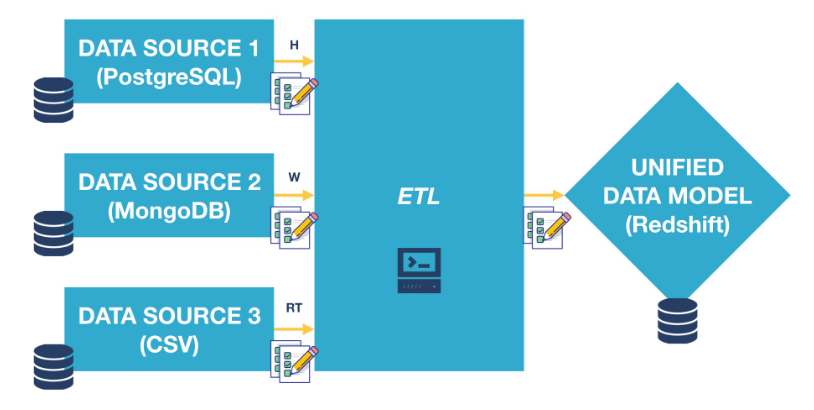

Automated Testing and Alerts

When integrating data, it’s important to ensure data integrity. Automated testing and alerts can help maintain data quality:

- After each transformation, aggregate the data to ensure it aligns with expectations, such as total sales figures remaining consistent

- If any discrepancies arise during transformation, proactive alerts can notify the team to address issues before they escalate

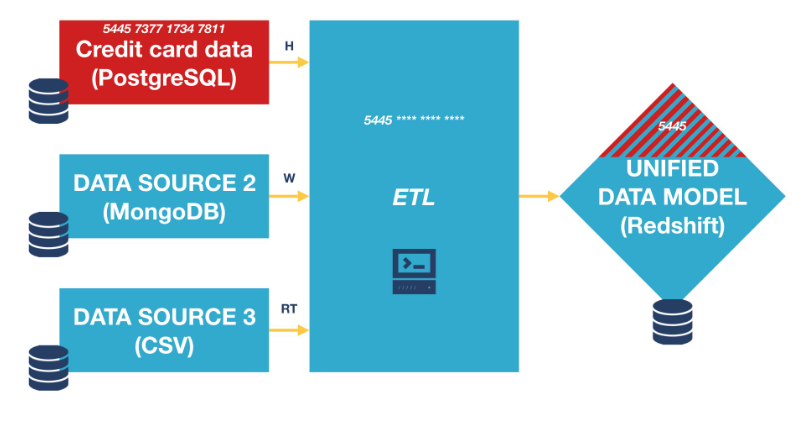

Security and Data Access

Maintaining security during data integration is essential, especially when dealing with sensitive information. For example, data access restrictions should be preserved in the unified model, ensuring that only authorized users have access to sensitive data.

An example of this is anonymizing credit card information during the ETL process. Analysts may only need to see the first four digits of the card to determine its type, while the rest of the data remains hidden.

Data Governance and Lineage

Effective data governance means keeping track of data origins and how data is used during integration. This is important for audits and following data management rules.